Degrees of freedom (DF) in statistics are the number of independent values that can vary in an analysis without breaking any constraints. It is an essential idea that appears in many contexts throughout statistics, including hypothesis tests, probability distributions, and regression analysis. Learn how this fundamental concept affects the power and precision of your statistical analysis!

In this blog post, I bring this concept to life in an intuitive way. I'll start by defining degrees of freedom. However, I'll quickly move on to practical examples in a variety of contexts because they make this concept easier to understand.

Definition of Degrees of Freedom

You can also think of it as the number of values that are free to vary as you estimate parameters. I know, it's starting to sound a little murky!

Degrees of freedom capture the idea that the amount of independent information you have limits the number of parameters you can estimate. Typically, the degrees of freedom equal your sample size minus the number of parameters you need to estimate during an analysis. It's usually a positive whole number.
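Here is a minimal sketch of the "sample size minus parameters" rule. The helper name `residual_df` is hypothetical. It subtracts and then rejects cases where no degrees of freedom remain. It covers only the typical case, since some procedures, such as Welch's t-test, compute DF with a different formula.

```python
def residual_df(n_observations: int, n_parameters: int) -> int:
    """Degrees of freedom left after estimating n_parameters from n_observations.

    Hypothetical helper for illustration: implements only the simple n - p rule.
    """
    df = n_observations - n_parameters
    if df < 1:
        # No independent information is left over once the parameters are estimated.
        raise ValueError(f"need more observations than parameters, got df={df}")
    return df


# Estimating one parameter (the mean) from 10 observations, as in a one-sample t-test.
print(residual_df(10, 1))  # 9
# Estimating two parameters (intercept and slope) in a simple linear regression.
print(residual_df(10, 2))  # 8
```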

Degrees of freedom combine how much data you have with how many parameters you need to estimate. They indicate how much independent information goes into a parameter estimate. From this, it's easy to see that you want a lot of information to go into parameter estimates, because that produces more precise estimates and more powerful hypothesis tests. So, you want many degrees of freedom!

Independent Information and Constraints on Values

The definitions refer to independent information. You might think this means the sample size, but it's a little more complicated than that. To understand why, we need to talk about the freedom to vary.

Suppose we collect the random sample of observations shown in the table below. Now, imagine we know the mean, but we don't know the value of the observation marked X.

The mean is 6.9, and it's based on 10 values. Because the mean equals the sum of the values divided by how many there are, the values must sum to 69.

The other nine values sum to 64, so simple algebra (64 + X = 69) tells us that X must equal 5.
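Here is the same arithmetic as a tiny Python sketch. `solve_last_value` is a hypothetical helper name. It works from the sum of the nine known values (64) rather than the individual table entries.

```python
def solve_last_value(known_sum: float, mean: float, n: int) -> float:
    """Return the one value forced by the mean once the other n - 1 values are known.

    Hypothetical helper for illustration.
    """
    # The mean fixes the total: the sum of all n values must equal n * mean.
    total = n * mean
    # Whatever the known values don't account for must be the missing value.
    return total - known_sum


# Numbers from the example: mean 6.9, n = 10, and nine known values summing to 64.
x = solve_last_value(known_sum=64, mean=6.9, n=10)
print(round(x, 10))  # 5.0
```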

Estimating Parameters Imposes Constraints on the Data

As you can see, that last value has no freedom to vary. It is not an independent piece of information because it cannot be any other value. Estimating the parameter (the mean, in this case) imposes a constraint on the freedom to vary. The last value and the mean are entirely dependent on each other. Consequently, after estimating the mean, we have only 9 independent pieces of information, even though our sample size is 10.

That's the basic idea behind degrees of freedom in statistics. In a general sense, DF is the number of observations in a sample that are free to vary while estimating statistical parameters. You can also think of it as the amount of independent data that you can use to estimate a parameter.
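One way to see this constraint in code: once the mean is estimated, the deviations from it must sum to zero, so the last deviation is fixed by the others. The sample below is made up purely for illustration. A familiar consequence of losing that one degree of freedom is that the sample variance divides by n - 1 rather than n. That is the convention Python's `statistics.variance` follows.

```python
import statistics

# A small hypothetical sample, invented only for this illustration.
sample = [4, 8, 6, 5, 7]
mean = statistics.mean(sample)  # 6 for this sample

# Deviations from the estimated mean always sum to zero (exactly, for these integers).
deviations = [x - mean for x in sample]
print(sum(deviations))  # 0

# So the last deviation is not free: it is fully determined by the first n - 1.
last_from_others = -sum(deviations[:-1])
print(last_from_others == deviations[-1])  # True

# Only n - 1 deviations carry independent information, hence the n - 1 divisor.
n = len(sample)
manual_variance = sum(d * d for d in deviations) / (n - 1)
print(manual_variance, statistics.variance(sample))  # 2.5 2.5
```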

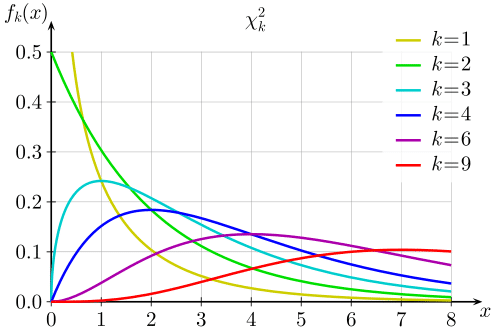

Degrees of freedom also define the probability distributions for the test statistics of various hypothesis tests. For example, hypothesis tests use the t-distribution, F-distribution, and chi-square distribution to determine statistical significance. Each of these probability distributions is a family of distributions in which the degrees of freedom define the shape. So, DF tie directly to p-values through these distributions!
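To see how DF shape a distribution, and therefore a p-value, the sketch below uses only the standard library. It evaluates the t-distribution density and integrates it numerically with Simpson's rule to get a two-sided p-value for the same t statistic at several DF. The function names are hypothetical, and the hand-rolled integration is a simplification. In practice you would typically rely on a statistics library such as SciPy.

```python
import math


def t_pdf(t: float, df: float) -> float:
    """Density of Student's t-distribution with df degrees of freedom."""
    # Working with log-gamma keeps the normalizing constant stable for large df.
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + t * t / df) ** (-(df + 1) / 2)


def two_sided_p(t_stat: float, df: float, steps: int = 2000) -> float:
    """Two-sided p-value P(|T| >= |t_stat|) via Simpson's rule on [0, |t_stat|]."""
    if steps % 2:
        raise ValueError("Simpson's rule needs an even number of steps")
    a, b = 0.0, abs(t_stat)
    h = (b - a) / steps
    total = t_pdf(a, df) + t_pdf(b, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2  # Simpson weights: 1, 4, 2, 4, ..., 2, 4, 1
        total += weight * t_pdf(a + i * h, df)
    central = total * h / 3  # P(0 <= T <= |t_stat|)
    return 1 - 2 * central  # the t-distribution is symmetric about 0


# Same test statistic, different degrees of freedom.
for df in (1, 5, 30):
    print(df, round(two_sided_p(2.0, df), 4))

# The normal distribution is the large-DF limit of the t-distribution.
print("normal", round(math.erfc(2.0 / math.sqrt(2)), 4))
```

With fewer DF the tails are heavier, so the same t statistic of 2.0 produces a larger p-value. As DF grow, the result approaches the normal-distribution value.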
